The A.I. Executive Briefing is an expert weekly curation of A.I. news by our research team, shared externally now because we feel there’s too much hype & noise in the market. Feel free to forward; the sign-up is at the end.

NEWS ROUND-UP

The new Justice League of A.I.

Amazon takes ownership of AI model distribution

The Fall of Stack Overflow and the Death of Originality

Open-source from OpenAI

Stability AI isn't going anywhere

Hard times for Hardware

VENTURE NEWS

Dell acquires venture-backed AIOps startup Moogsoft

Protect AI, a suite of AI-defending cyber tools, raises $35M in Series A

Unstructured, which offers tools to prep enterprise data for LLMs, raises $25M in Series A

AutogenAI, a generative AI tool for writing bids and pitches, raises $22.3M

FedML, which is combining MLOps tools with a decentralized AI compute network, raises $11.5M in Seed Round

FairPlay, a “Fairness-as-a-Service” AI, raises $10M in Series A

News Round-up

1. The new Justice League of A.I.

The USA has been relatively quiet on frontier AI legislation. Most recently, President Biden invited leaders from seven AI companies to the White House to discuss “voluntary” commitments to self-regulation. In response, four of the leading AI companies have come together to form the new Justice League of AI (officially, the Frontier Model Forum), with the stated goal of drafting standards and guidance for all fellow AI companies to follow, even as they quietly compete for each other's market share and extract as much value out of the end user as possible.

OpenAI, Google, Microsoft, and Anthropic have banded together to lay out best practices and collaborate with public and private stakeholders alike.

Why this matters: Will our newly appointed oligarchical AI champions squash new entrants with fresh rules and guidance on how to build AI systems? Or will open source (that means training code, pre-training data, fine-tuning preferences, etc.) become the norm and the standard?

2. Amazon takes ownership of AI model distribution

Amazon has launched Bedrock, a platform for building conversational AI agents. To help users build their agents, Amazon offers a library of foundation models to choose from: Anthropic’s Claude 2 text-generation model, Stable Diffusion XL 1.0, and Cohere’s foundation models (specifically Command for text generation and Cohere Embed) are all available via Bedrock.

Why this matters: By offering capable models that other companies have already built (some open, like Stable Diffusion XL, some proprietary, like Claude 2), Amazon sidesteps the need to build the best model itself and can instead focus on building the best place to use those models to construct conversational AI agents.

3. The Fall of Stack Overflow and the Death of Originality

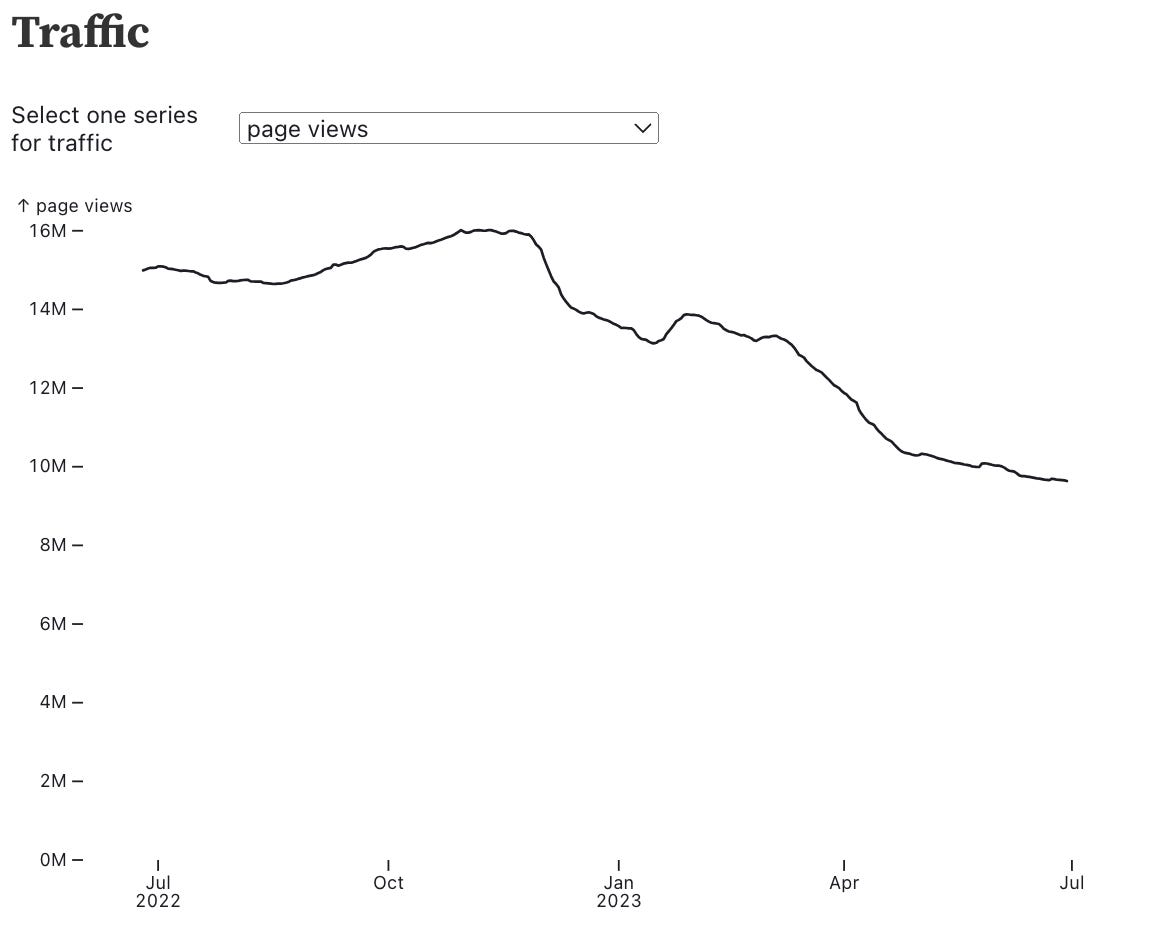

Over the past 18 months, Stack Overflow has lost around 35% of its traffic and seen a roughly 50% decrease in the number of questions and answers. The driving factor is thought to be software engineers shifting from forums like Stack Overflow to private chat interfaces with coding-focused LLMs.

We are entering an interesting world where LLMs need forums like Stack Overflow to exist in order to build models that can help engineers debug and write code, but the existence of those LLMs is causing the decline of the forums themselves. The original content of coders seeking advice from other coders will decrease as people stop using the internet to commune with other people and instead spend more time communing with bots. The same catch-22 is playing out across text, image, and video content all over the internet as generative models produce an increasing share of what is available on the open internet.

Why this matters: We have passed the point where we can depend on the internet for seemingly original content. A growing percentage of the internet will be generated by LLMs, and this will degrade the quality of any future AI trained on the open internet, the way the current suite of open-source LLMs has been trained.

4. Open-source from OpenAI

Speaking of open source, have you heard of G3PO? It is slated to be OpenAI's new open-source model. It was expected over two months ago and has yet to appear, while companies like Meta, Stability, and Hugging Face are taking up most of the open-source market share with models that are already available and being adopted by builders.

We should not be surprised if it doesn't get released. OpenAI isn't afraid to cut its losses on projects that do not work: it seems to have retired its AI content detection tool, AI Classifier, because it was inaccurate. This news is not surprising given the documented bias of AI detection tools against non-native English speakers, whose original writing is often incorrectly flagged as the work of an AI.

Why this matters: The open-source LLM movement has been gaining steam, especially with releases from companies such as Meta, Stability, and Hugging Face. With OpenAI distracted by building out a marketplace for customizable models and a personalized ChatGPT assistant, and facing rising competition from the players mentioned above, it is questionable if and when G3PO will ever be released.

5. Stability AI isn't going anywhere

Last week we were correctly bullish on Stability AI releasing new features and products after a hiatus, likely related to the current squabble between the company's founders. Sure enough, a slew of updates from the Stability AI team confirms that they still have a generative horse in this AI race.

First, they have released two new open-source LLMs. Stability's CarperAI team developed two new language models called FreeWilly1 and FreeWilly2, based on Meta's open-source LLaMA and Llama 2 models, respectively. Both FreeWilly models are currently leading the way on Hugging Face's Open LLM Leaderboard, with FreeWilly2 at the top of the list among all open-source models, surpassing even the original Llama 2 model in performance. Second, they have released SDXL 1.0, the largest open text-to-image generation model. Check out some of the examples generated by the model here. Stability AI's image models seem best suited to inanimate objects, doodle translation, and advanced image editing, features that are commonplace among the generative AI companies operating in this space.

Why this matters: Competition has been heating up in the LLM/GenAI space, especially in the open-source category. We’re glad to see Stability AI making a strong start in the LLM space and remaining relevant in the generative AI space, but we are hesitant to say whether they will come out on top as the dominant leader in either.

6. Hard times for Hardware

It is painfully obvious to those who have been reading Unwiring AI that the battle for high-end computing chips keeps heating up, and the geopolitical tensions surrounding the topic seem ever closer to boiling over. Demand far outstrips supply, which is driving plans for new factories and the production of new types of chips.

Samsung is expanding production at its chip-manufacturing complex in Pyeongtaek, about 40 miles south of Seoul, as well as setting up a chip factory in Texas. We need more companies focused on building manufacturing hubs, since many of Samsung's peers are focused only on designing new types of chips to make creating and running their AI models more efficient.

One new chip, DishBrain, which uses 800,000 human and mouse brain cells lab-grown onto its electrodes, has shown advanced learning capabilities compared to traditional silicon. DishBrain is not the first instance of fusing brain cells and microchips, and it won't be the last. It is produced and sold by Cortical Labs, which is pioneering this space. Perhaps some of the alternative meat companies could sell their bioreactors to Cortical Labs to produce something actually useful.

Why this matters: There is far more global demand than supply for chips, and meeting it will take more companies like Samsung that focus on chip manufacturing rather than solely on chip design (e.g. Meta, Apple).

Venture News

7. Dell acquires venture-backed AIOps startup Moogsoft

Now that LLMs have reduced the time spent building applications, a secondary issue emerges: validating the veracity and interoperability of containerized apps within an organization's overall technical architecture. That problem grows alongside DevOps teams' need to monitor and understand how code is deployed and admin teams' need to monitor logs. To relieve the pressure building in this sphere, Dell is acquiring Moogsoft (the deal is to be finalized later this year).

When technical problems arise in a company's network, cascading effects can cause multiple issues at once. Moogsoft’s platform identifies and clusters related error alerts, making it easier for admins to diagnose and fix the underlying problem. Moogsoft has patented algorithms that filter out 99% of the data “noise” collected from a company’s systems. The platform then enriches the remaining information with contextual details to help DevOps and admin teams proactively avoid issues.
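To make the clustering idea concrete, here is a minimal Python sketch. It is not Moogsoft's patented algorithm; it assumes a simple rule chosen only for illustration: alerts arriving within five minutes of the previous alert belong to the same incident, and duplicate (service, message) pairs inside an incident are collapsed as a crude form of noise reduction. `Alert`, `cluster_alerts`, and `summarize` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    timestamp: datetime
    service: str
    message: str


def cluster_alerts(alerts, gap=timedelta(minutes=5)):
    """Group alerts into incidents: a new incident starts whenever the
    time since the previous alert exceeds `gap` (an illustrative rule)."""
    clusters = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        if clusters and alert.timestamp - clusters[-1][-1].timestamp <= gap:
            clusters[-1].append(alert)
        else:
            clusters.append([alert])
    return clusters


def summarize(cluster):
    """Collapse duplicate (service, message) pairs, a crude stand-in for noise filtering."""
    distinct = sorted({(a.service, a.message) for a in cluster})
    return {"start": cluster[0].timestamp, "alerts": len(cluster), "distinct": distinct}


# Made-up alerts: a database problem cascading into API errors, then a later, separate blip.
t0 = datetime(2023, 7, 31, 12, 0)
alerts = [
    Alert(t0, "db", "connection timeout"),
    Alert(t0 + timedelta(minutes=1), "db", "connection timeout"),
    Alert(t0 + timedelta(minutes=2), "api", "HTTP 500"),
    Alert(t0 + timedelta(minutes=30), "db", "connection timeout"),
]

for incident in cluster_alerts(alerts):
    print(summarize(incident))
```

The first three alerts land in one incident with two distinct problems, so an admin sees one cascade instead of three pages. Production AIOps tools likely weigh many more signals than a fixed time gap, such as service topology and message similarity.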

8. Protect AI, a suite of AI-defending cyber tools, raises $35M

The idea of a Bill of Materials (BoM) has made its way into software, particularly where several pieces of software (open-source or private), each with its own dependencies and components, are strung together. The ML and AI stack now has its own equivalent, the MLBoM, which records the training data, test datasets, and code behind a model.

Protect AI has raised $35M on the back of its tool AI Radar, which produces an MLBoM so teams can focus on cybersecurity during the build phase rather than after an AI or ML app has already been deployed. Implementing clean security architecture and maintaining a clear understanding of the digital components of AI systems is the best way to protect users, companies, and applications from exploits in an increasingly hostile digital world.
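At heart, an MLBoM is a structured inventory. The Python sketch below is a toy that assumes a schema invented for illustration (`Component`, `MLBoM`, and the field names are hypothetical and are not AI Radar's format; formal standards such as CycloneDX define far richer schemas). It records each dataset and code artifact with a SHA-256 fingerprint, so a later check can flag a component that changed after it was recorded.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Component:
    kind: str      # "dataset", "code", "model", ...
    name: str
    version: str
    sha256: str    # fingerprint of the artifact's bytes


@dataclass
class MLBoM:
    model_name: str
    components: list[Component] = field(default_factory=list)

    def add(self, kind: str, name: str, version: str, content: bytes) -> None:
        """Record an artifact along with a hash of its contents."""
        digest = hashlib.sha256(content).hexdigest()
        self.components.append(Component(kind, name, version, digest))

    def is_tampered(self, name: str, content: bytes) -> bool:
        """True if `content` no longer matches the recorded hash for `name`."""
        for c in self.components:
            if c.name == name:
                return hashlib.sha256(content).hexdigest() != c.sha256
        raise KeyError(f"{name} is not in the bill of materials")

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


# Hypothetical model and artifacts, for illustration only.
bom = MLBoM("loan-risk-model")
bom.add("dataset", "train.csv", "2023-07", b"id,amount\n1,9.99\n")
bom.add("code", "train.py", "v1.2", b"print('training')\n")
print(bom.to_json())
print(bom.is_tampered("train.csv", b"id,amount\n1,99999\n"))  # training data was modified
```

Hashing the artifacts is what lets a BoM do security work: if someone swaps the training data after the inventory is taken, the fingerprint no longer matches.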

9. Unstructured, which offers tools to prep enterprise data for LLMs, raises $25M in Series A

Have you ever finished a series of projects or tasks and realized that all the related information is scattered across a variety of documents, file types, and applications? That happens to government employees all the time. Unstructured’s primary open-source product is a suite of data processing tools for turning that scattered content into data LLMs can use.

The company develops processing pipelines for specific document types, including PDFs, HTML docs, Word docs, SEC filings, and even U.S. Army Officer Evaluation Reports. It has been awarded contracts by the U.S. Air Force, U.S. Space Force, and U.S. Special Operations Command to deploy an LLM with mission-relevant data. Unstructured raised $25M in part because of its close work with the government. We're glad to see branches of the government embracing tried-and-tested open-source tools: Unstructured’s suite has over 700k downloads and is currently used in over 100 organizations.

10. AutogenAI, a generative AI tool for writing bids and pitches, raises $22M

A common use case for LLMs is generating text for emails, summarizing meetings, and doing general research (barring any hallucinations). The evolution of LLMs suggests that autonomous agents trained on proprietary company data will become the primary way of executing low-level communication and summarization tasks. A future version of this concept is an AI sales agent that pitches deals based on meeting notes, emails, and client information. AutogenAI has raised $22M with the aim of increasing pitch-generation efficiency by 800%. The company seems to have essentially fine-tuned a GPT model and benchmarks its model's performance against the base GPT models.

11. FedML, which is combining MLOps tools with a decentralized AI compute network, raises $11.5M in Seed Round

MLOps, as an extension of traditional DevOps, is a popular theme and is seen as a growing problem by many tech entrepreneurs. Beyond offering yet another platform for fine-tuning and further training OpenAI's models, FedML has a platform designed to train, deploy, monitor, and improve AI models in the cloud or at the edge. The interesting piece is that they are building a network of users who contribute computing power to help train and deploy models built on the FedML platform. With over 10k devices currently contributing to the network, FedML has raised $11.5M to tackle the compute constraints that are a constant source of frustration for the space.

12. FairPlay, a “Fairness-as-a-Service” AI, raises $10M in Series A

Providing financial access to underserved communities is a long-standing problem. As niche cultural communities have grown across the United States, traditional methods for underwriting credit risk do not apply as readily to newer cultural groups. FairPlay has raised $10M on the back of its two API products: one analyzes bias within a lender's model, and the second essentially reopens previously rejected loan applications. The idea is that underserved communities get access to financial products and lenders make more money, so everybody wins. However, although the product is pitched as “Fairness-as-a-Service,” another way to look at this technology is that it enables previously rejected loans to be sold to underserved communities, stacking them with debt and interest payments solely to increase a lender's profitability and book size.

A more holistic approach would be to transparently redefine the concept of borrower creditworthiness rather than hide the revaluation behind an opaque model sold to lenders. Increasing access to financial products for communities traditionally denied them is a huge win, and we applaud the work done toward this goal, albeit with the concern that these same groups can be exploited by the financial products they are offered.
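For a sense of what analyzing bias within a lender's model can involve, one widely used screening heuristic is the adverse impact ratio: a group's approval rate divided by a reference group's approval rate, with values below 0.8 (the "four-fifths rule" borrowed from U.S. employment guidelines) treated as a warning sign. The Python sketch below assumes that metric and uses made-up decisions; we don't know which metrics FairPlay's API actually computes, and `adverse_impact_ratio` is a hypothetical helper.

```python
def approval_rate(decisions: list[int]) -> float:
    """Share of applications approved (1 = approved, 0 = denied)."""
    if not decisions:
        raise ValueError("no decisions to evaluate")
    return sum(decisions) / len(decisions)


def adverse_impact_ratio(group: list[int], reference: list[int]) -> float:
    """Approval rate of `group` relative to the `reference` group."""
    ref_rate = approval_rate(reference)
    if ref_rate == 0:
        raise ValueError("reference group has no approvals")
    return approval_rate(group) / ref_rate


FOUR_FIFTHS = 0.8

# Made-up outcomes for illustration only.
reference_group = [1] * 80 + [0] * 20    # 80% approved
underserved_group = [1] * 50 + [0] * 50  # 50% approved

ratio = adverse_impact_ratio(underserved_group, reference_group)
status = "flag for review" if ratio < FOUR_FIFTHS else "within threshold"
print(f"adverse impact ratio = {ratio:.3f} ({status})")
```

A low ratio only says that outcomes differ between groups; deciding whether the model is unfair, and whether reopening those applications actually helps borrowers, is the judgment call discussed above.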

Reader Questions

Q: What is the difference between Meta’s CM3leon model and other models like Midjourney and Stability AI's Stable Diffusion?

A: Unlike image generators that rely on diffusion, CM3leon is a transformer-based model. Meta reports that it reaches state-of-the-art text-to-image quality with five times less training compute than comparable transformer-based methods.

Stable Diffusion starts with random noise, like TV static. It then removes that noise a little at a time, guided by the text prompt, until what's left is a clean, realistic image. The "diffusion" part comes from training: the model learns by watching real images gradually dissolve into noise and learning to reverse each step. "Stable" is simply part of the name, not a description of the process. Taking many small denoising steps rather than one big leap is what keeps the final image from looking messy or unrealistic.
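The step-by-step denoising can be sketched in a few lines of Python. This toy follows the standard DDPM-style sampling loop on a four-"pixel" image, with two big simplifying assumptions: the noise schedule values are arbitrary, and the trained neural network is replaced by a hypothetical `oracle_noise_predictor` that already knows the target image and so predicts the noise perfectly. Real Stable Diffusion instead uses a text-conditioned network working in a compressed latent space.

```python
import math
import random


def make_schedule(steps=50, beta_start=1e-4, beta_end=0.05):
    """Linear noise schedule (illustrative values) and its cumulative products."""
    betas = [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]
    alphas = [1 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    return betas, alphas, alpha_bars


def oracle_noise_predictor(x_t, t, alpha_bars, target):
    """Stand-in for the trained network: knowing the clean image,
    it computes exactly how much noise is present in x_t."""
    ab = alpha_bars[t]
    return [(x - math.sqrt(ab) * x0) / math.sqrt(1 - ab) for x, x0 in zip(x_t, target)]


def sample(target, steps=50, seed=0):
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure static
    for t in reversed(range(steps)):
        eps = oracle_noise_predictor(x, t, alpha_bars, target)
        coef = betas[t] / math.sqrt(1 - alpha_bars[t])
        # Remove a slice of the predicted noise.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add a little fresh noise at every step except the last.
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


target = [0.0, 0.5, 1.0, 0.25]  # the "clean image" the oracle knows about
result = sample(target)
print([round(v, 4) for v in result])
```

Swap the oracle for a learned model and the same loop is, in spirit, how diffusion samplers turn static into a picture; the hard part is training that model.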

A transformer is a bit like a puzzle solver. It looks at all the small pieces (patches) of an image and works out how each one relates to every other piece. To make a new picture, the transformer is given the text describing it and uses that description as clues to decide which puzzle pieces go where. To recognize pictures, it looks at the puzzle pieces and uses the patterns it has learned to decide what the picture shows.
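The "work out how each piece relates to every other piece" step is what transformers call self-attention. Below is a minimal pure-Python sketch of scaled dot-product self-attention over three made-up patch vectors. To keep it short, it assumes identity projections (queries, keys, and values are the patch vectors themselves) and a single attention head; real transformers learn separate projection matrices and stack many heads and layers.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(patches):
    """Scaled dot-product self-attention with identity projections (Q = K = V = patches)."""
    d = len(patches[0])
    weights, outputs = [], []
    for q in patches:
        # How strongly this patch "matches" every patch, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in patches]
        w = softmax(scores)
        weights.append(w)
        # New representation: a weighted blend of all patches.
        outputs.append([sum(w[j] * patches[j][c] for j in range(len(patches))) for c in range(d)])
    return weights, outputs


patches = [
    [1.0, 0.0],  # a "sky" patch
    [0.9, 0.1],  # another, similar "sky" patch
    [0.0, 1.0],  # a "grass" patch
]
weights, outputs = self_attention(patches)
for row in weights:
    print([round(w, 2) for w in row])
```

Patches that look alike (the two "sky" vectors) attend mostly to each other, which is the puzzle-solver intuition in numeric form.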

Send us a message with any questions/comments/thoughts on anything A.I. related and we’ll try to answer them in our next release.